Adding Percussion to My Julia MIDI Synthesizer

Listen to A Day in the Life

Listen to some output from the synthesizer :)

Introduction

Previously, I created a MIDI synthesizer in Julia that combines additive synthesis with an amplitude envelope. This synthesizer reads multi-track MIDI files and renders them as WAV output. This post focuses on adding percussion support.

It turns out that making a hi-hat is surprisingly simple: generate random noise and shape it with an exponential decay. That made me want to experiment with noise and envelopes to create other percussive elements. Once I had synthesized a couple of passable drum sounds, I turned to how note durations should be treated for percussive instruments, since I suspected my code would need adjusting.
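A minimal sketch of that hi-hat recipe, assuming a uniform white-noise source and a decay rate of 40 per second. The helper name hihat and all of its defaults are illustrative, not taken from the project:

```julia
using Random

# Hypothetical helper: white noise shaped by an exponential decay envelope.
function hihat(; sample_rate::Int=44100, duration::Float64=0.15,
               decay_rate::Float64=40.0, seed::Int=1)
    rng = MersenneTwister(seed)               # seeded so the noise is reproducible
    n = round(Int, duration * sample_rate)
    t = (0:n-1) ./ sample_rate                # sample times in seconds
    noise = 2 .* rand(rng, n) .- 1            # uniform white noise in [-1, 1)
    envelope = exp.(-decay_rate .* t)         # starts at 1.0 and decays toward 0
    return noise .* envelope
end

samples = hihat()
```

Raising decay_rate gives a tighter, more "closed" sound; lowering it lets the noise ring longer.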

Are Drum Note-Off Events Meaningless?

In MIDI, notes have both 'on' and 'off' events, which map intuitively onto keyboard instruments: you press a key to start the sound and release it to stop. But with drums, does 'letting go' even make sense? My tentative view is that the sound of a drum hit stays the same regardless of its musical length: a snare eighth note sounds the same as a snare quarter note. With this in mind, I ignored note duration when synthesizing drums, so the drums in the drumset instrument produce audio of the same length no matter how long the MIDI note is.
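One way to express this policy in code is sketched below. It assumes, hypothetically, that a drum's fixed length is attack + decay + release, while a melodic note lasts its MIDI duration plus a release tail; render_seconds is an illustrative name, not a function from the project.

```julia
# Hypothetical policy: how many seconds of audio to render for one note.
function render_seconds(is_drum::Bool, note_duration::Real, params::AbstractDict)
    if is_drum
        # Drums ignore the MIDI duration: fixed attack + decay + release
        return params["attack"] + params["decay"] + params["release"]
    end
    # Melodic notes sound for their duration, then release after noteOff
    return note_duration + params["release"]
end

snare = Dict("attack" => 0.001, "decay" => 0.1, "release" => 0.05)
mallet = Dict("attack" => 0.002, "decay" => 0.15, "release" => 0.3)
```

With these values, a snare eighth note and a snare quarter note both render 0.151 s of audio.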

After experimenting with different methods of generating percussive sounds, I opted for simplicity: a single function to handle all sound synthesis.

Sound Generation

The synthesize_drum() function is used for all synthesis:

- Generates oscillator components (Optional)

- Adds noise with optional filtering (Optional)

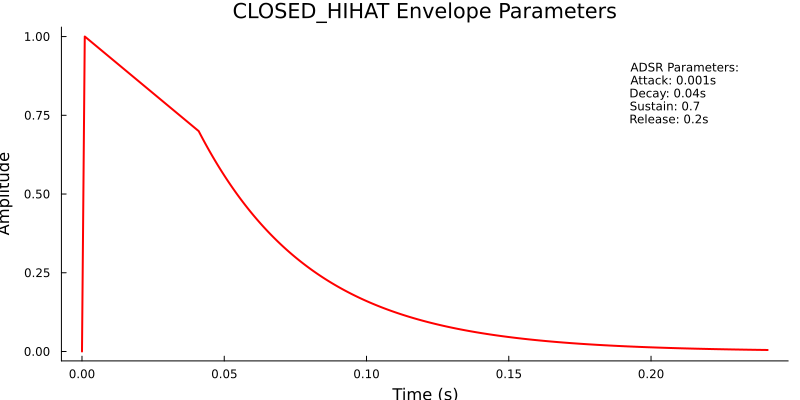

- Applies a dynamic ADSR envelope

- Normalizes the final signal
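The steps listed above can be sketched as follows. This is not the project's synthesize_drum; it is an illustration that assumes a one-pole low-pass filter for the noise stage, linear attack and decay, an exponential release, and parameter names like those in the configuration examples. The helpers lowpass and adsr are hypothetical.

```julia
using Random

# One-pole low-pass filter: y[i] = y[i-1] + α * (x[i] - y[i-1])
function lowpass(x::Vector{Float64}, cutoff::Float64, sr::Int)
    dt = 1 / sr
    rc = 1 / (2π * cutoff)
    α = dt / (rc + dt)
    y = similar(x)
    prev = 0.0
    for i in eachindex(x)
        prev += α * (x[i] - prev)
        y[i] = prev
    end
    return y
end

# Linear attack and decay, optional hold at the sustain level, then an
# exponential release (r must be > 0). Drums use hold = 0.
function adsr(n::Int, sr::Int, a, d, s, r; hold=0.0)
    env = zeros(n)
    for i in 1:n
        t = (i - 1) / sr
        if t < a
            env[i] = t / a
        elseif t < a + d
            env[i] = 1 - (1 - s) * (t - a) / d
        elseif t < a + d + hold
            env[i] = s
        else
            env[i] = s * exp(-5 * (t - a - d - hold) / r)
        end
    end
    return env
end

# Sketch of a single synthesis function driven by a parameter Dict.
function synthesize_drum(p::AbstractDict; sr::Int=44100, seed::Int=1)
    a, d, s, r = p["attack"], p["decay"], p["sustain"], p["release"]
    n = round(Int, (a + d + r) * sr)        # fixed length, MIDI duration ignored
    t = (0:n-1) ./ sr
    signal = zeros(n)
    # Oscillator stage (optional): weighted sines at frequencies given in Hz
    for (f, w) in zip(get(p, "frequencies", Float64[]), get(p, "weights", Float64[]))
        signal .+= w .* sin.(2π * f .* t)
    end
    # Noise stage (optional): white noise, low-passed when a cutoff is given
    level = get(p, "noise_level", 0.0)
    if level > 0
        noise = 2 .* rand(MersenneTwister(seed), n) .- 1
        if haskey(p, "noise_cutoff")
            noise = lowpass(noise, Float64(p["noise_cutoff"]), sr)
        end
        signal .+= level .* noise
    end
    signal .*= adsr(n, sr, a, d, s, r)      # envelope stage
    peak = maximum(abs, signal)             # normalization stage
    return peak > 0 ? signal ./ peak : signal
end

snare = Dict{String,Any}(
    "frequencies" => [200.0, 400.0], "weights" => [0.7, 0.3],
    "noise_level" => 0.9, "noise_cutoff" => 2000.0,
    "attack" => 0.001, "decay" => 0.1, "sustain" => 0.0, "release" => 0.05)
x = synthesize_drum(snare)
```

With the Electric Snare parameters (sustain 0.0), this sketch is silent once attack + decay has elapsed; a nonzero sustain would instead fade out exponentially during the release.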

The synthesizer supports sound generation through two primary mechanisms:

Melodic Instruments:

- Use frequency multiplication to create rich harmonics

- Example: A base frequency of 440 Hz can generate additional frequencies at 880 Hz and 1320 Hz

- Weights control the amplitude of each harmonic component

Percussion Instruments:

- Frequencies are specified directly, in Hz

- Weights control the amplitude of each frequency component

All Instruments:

- All instruments share the same noise stage, with options for noise level, filter cutoff, and filter design

Instrument Configuration Examples

Percussion Instrument Example:

    "40": {
      "name": "Electric Snare",
      "parameters": {
        "frequencies": [200.0, 400.0],
        "weights": [0.7, 0.3],
        "noise_level": 0.9,
        "noise_cutoff": 2000.0,
        "attack": 0.001,
        "decay": 0.1,
        "sustain": 0.0,
        "release": 0.05
      }
    }

Melodic Instrument Example:

    "Mallet": {
      "parameters": {
        "frequencies": [1, 2, 4, 6],
        "weights": [1.0, 0.4, 0.15, 0.05],
        "attack": 0.002,
        "decay": 0.15,
        "sustain": 0.0,
        "sustain_time": 0.1,
        "release": 0.3
      }
    }

For example, if synthesize_melodic_instrument is called for Mallet with a pitch of 440 Hz, it sums sine waves at 440, 880 (440 × 2), 1760 (440 × 4), and 2640 (440 × 6) Hz, weighted by 1.0, 0.4, 0.15, and 0.05 respectively.
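To make the arithmetic concrete, here is a sketch of that sum for Mallet at 440 Hz, leaving out the envelope. The function name additive and its keyword defaults are assumptions for illustration:

```julia
# Additive synthesis: weighted sines at multiples of a base frequency.
# (Envelope omitted; additive is a hypothetical helper name.)
function additive(base_hz::Float64, multipliers, weights;
                  sr::Int=44100, seconds::Float64=0.5)
    t = (0:round(Int, seconds * sr)-1) ./ sr
    out = zeros(length(t))
    for (m, w) in zip(multipliers, weights)
        out .+= w .* sin.(2π * (m * base_hz) .* t)
    end
    return out
end

mallet_freqs = 440.0 .* [1, 2, 4, 6]   # component frequencies in Hz
wave = additive(440.0, [1, 2, 4, 6], [1.0, 0.4, 0.15, 0.05])
```

Because the weights add up to 1.6, the raw sum is bounded by 1.6 rather than 1.0, which is one reason the final normalization step matters.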

MIDI Drum Mapping

In the General MIDI specification, the drum kit (channel 10) uses MIDI notes 35 to 81, with a specific percussion instrument assigned to each pitch. For example, MIDI pitch 70 corresponds to maracas.
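For reference, here are a handful of entries from the General MIDI percussion key map, written as a Julia Dict. The names GM_DRUMS, is_gm_drum, and drum_name are illustrative; only the note-to-instrument assignments come from the General MIDI standard.

```julia
# A few entries from the General MIDI percussion key map. Drums play on
# MIDI channel 10 (channel index 9 when counting from 0).
const GM_DRUMS = Dict{Int,String}(
    35 => "Acoustic Bass Drum",
    36 => "Bass Drum 1",
    38 => "Acoustic Snare",
    40 => "Electric Snare",
    42 => "Closed Hi-Hat",
    46 => "Open Hi-Hat",
    49 => "Crash Cymbal 1",
    70 => "Maracas",
    81 => "Open Triangle",
)

is_gm_drum(note::Integer) = 35 <= note <= 81

function drum_name(note::Integer)
    haskey(GM_DRUMS, note) && return GM_DRUMS[note]
    return is_gm_drum(note) ? "GM drum (not listed here)" : "outside the GM drum range"
end
```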

With the basic sound generation mechanisms in place, I turned to streamlining instrument configuration. Rather than creating an individual function for each drum type, I implemented a parameterized approach:

- I created a JSON configuration file that defines instrument parameters, such as attack, decay, and noise_level, for each MIDI drum pitch and for each melodic instrument.

- If the drumset plays a note with pitch 40, the synthesizer looks up the parameters for 40, finds "Electric Snare", and returns synthesize_drum called with the parameters from the JSON file for that pitch.

- A melodic instrument, such as "Mallet", uses the parameters for that instrument. It substitutes the note's pitch as the base frequency and calculates the sustain from the note's duration.
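The lookup described above might look like the sketch below. PARAMS is a hypothetical in-memory copy of a few parameters.json entries (in practice the file would be parsed with a JSON package), and the sustain rule, sustain time = duration minus attack minus decay, is an assumption rather than the project's exact formula.

```julia
# Hypothetical copy of a few parameters.json entries: drum entries are
# keyed by MIDI pitch (as a string), melodic ones by instrument name.
const PARAMS = Dict{String,Any}(
    "40" => Dict{String,Any}(
        "name" => "Electric Snare",
        "parameters" => Dict{String,Any}(
            "frequencies" => [200.0, 400.0], "attack" => 0.001, "decay" => 0.1)),
    "Mallet" => Dict{String,Any}(
        "parameters" => Dict{String,Any}(
            "frequencies" => [1, 2, 4, 6], "attack" => 0.002, "decay" => 0.15)),
)

midi_to_hz(pitch::Integer) = 440.0 * 2.0^((pitch - 69) / 12)

# Returns the parameter set that would be handed to the synthesis function.
function resolve_note(is_drum::Bool, instrument::String, pitch::Int, duration::Float64)
    if is_drum
        # Drums: look up by pitch; frequencies are already in Hz, duration ignored
        return copy(PARAMS[string(pitch)]["parameters"])
    end
    p = copy(PARAMS[instrument]["parameters"])
    p["frequencies"] = midi_to_hz(pitch) .* p["frequencies"]   # multipliers -> Hz
    # Assumed rule: sustain fills what the note length leaves after attack + decay
    p["sustain_time"] = max(duration - p["attack"] - p["decay"], 0.0)
    return p
end
```

Copying the parameter Dict keeps per-note changes (base frequency, sustain) from leaking back into the shared configuration.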

Envelope Iteration

In my first synthesizer implementation, the release stage happened while the note was still on, before the noteOff event. I changed it to start after the noteOff event, which is how release is typically implemented. I also changed the release stage to use exponential decay, which creates more natural-sounding percussion by mimicking how sound decays in the real world.
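A sketch of an envelope whose release begins at noteOff, assuming linear attack and decay and a release that decays as exp(-5t/r), so roughly 0.7% of the starting level remains after r seconds. Starting the release from whatever level the note had reached avoids a sudden jump in level when noteOff arrives during the attack or decay. envelope_at is a hypothetical name.

```julia
# Envelope level at time t (seconds) for a note released at note_off.
function envelope_at(t, note_off, a, d, s, r)
    # Level the attack/decay/sustain stages would produce at time τ
    function held(τ)
        if τ < a
            return τ / a
        elseif τ < a + d
            return 1 - (1 - s) * (τ - a) / d
        else
            return s
        end
    end
    t < note_off && return held(t)
    # Exponential release from the level reached at noteOff
    return held(note_off) * exp(-5 * (t - note_off) / r)
end
```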

Velocity and Pitch Scaling

Low-pitched notes were quieter than high-pitched ones. I implemented an exponential mapping function that scales note velocity based on pitch, amplifying lower-frequency sounds. Bass instruments still sounded quiet, so I hardcoded a 4x multiplier for them. A more nuanced mixing setup would be ideal.
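The exact mapping isn't spelled out here, so the following is only an assumed form: gain = exp(k * (ref - pitch)), with a hypothetical reference note of 60 (middle C) and k = 0.02, plus the hardcoded 4x for bass instruments. pitch_gain and scaled_velocity are illustrative names.

```julia
# Assumed pitch compensation: gain rises exponentially as the pitch falls
# below a reference note (60 = middle C), boosting low notes.
pitch_gain(pitch::Integer; ref::Integer=60, k::Float64=0.02) = exp(k * (ref - pitch))

function scaled_velocity(velocity::Integer, pitch::Integer; is_bass::Bool=false)
    v = velocity / 127 * pitch_gain(pitch)   # MIDI velocity runs 0..127
    return is_bass ? 4.0 * v : v             # hardcoded 4x boost for bass instruments
end
```

With k = 0.02, a note two octaves below middle C gets about 1.6 times the gain of middle C.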

Caching and Performance Optimization

A caching mechanism was implemented to improve synthesis performance:

- The cache stores generated notes keyed on:

  - Instrument name

  - Note duration

  - Velocity

  - Pitch

This approach significantly reduces redundant computation during MIDI file rendering, since the same note is often played many times in a song.
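A minimal memoization sketch using Julia's built-in get!, which only runs the render function when the key is missing. render_note here is a hypothetical stand-in that returns a constant buffer and counts how often it is called; the real synthesis call would go in its place.

```julia
# Hypothetical note cache keyed by (instrument, duration, velocity, pitch).
const NOTE_CACHE = Dict{Tuple{String,Float64,Int,Int},Vector{Float64}}()
const RENDER_COUNT = Ref(0)

# Stand-in for the real synthesis call; counts invocations.
function render_note(instrument::String, duration::Float64, velocity::Int, pitch::Int)
    RENDER_COUNT[] += 1
    return fill(velocity / 127, round(Int, duration * 44100))
end

# Render on a cache miss, otherwise return the stored buffer.
cached_note(instr, dur, vel, pitch) =
    get!(() -> render_note(instr, dur, vel, pitch), NOTE_CACHE, (instr, dur, vel, pitch))
```

Cached buffers are shared between calls, so they should be treated as read-only (for example, copy before applying per-note gain). Since drums ignore duration, leaving duration out of drum keys would be one way to get more cache hits.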

The current caching strategy could benefit from an LRU (least recently used) eviction policy to cap memory consumption, especially when rendering multiple MIDI files with diverse instrument sets. For now, I don't recommend rendering multiple MIDI files in a single run, to avoid potential memory issues.
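A minimal LRU sketch, assuming a fixed maximum number of cached notes. LRUCache and lru_get! are hypothetical names; recency is tracked in a plain Vector, which makes each hit O(n) but keeps the code short. A linked-list or package-based design would scale better.

```julia
# Bounded cache that evicts the least recently used key when full.
struct LRUCache{K,V}
    capacity::Int
    store::Dict{K,V}
    order::Vector{K}          # least recently used first
end
LRUCache{K,V}(capacity::Int) where {K,V} = LRUCache{K,V}(capacity, Dict{K,V}(), K[])

# Move key to the most-recently-used end of the order list.
function mark_used!(c::LRUCache, key)
    i = findfirst(==(key), c.order)
    i === nothing || deleteat!(c.order, i)
    push!(c.order, key)
end

# Return the cached value, or compute it with f() and evict if over capacity.
function lru_get!(f::Function, c::LRUCache{K,V}, key::K) where {K,V}
    if haskey(c.store, key)
        mark_used!(c, key)
        return c.store[key]
    end
    value = f()
    c.store[key] = value
    mark_used!(c, key)
    if length(c.order) > c.capacity
        evicted = popfirst!(c.order)
        delete!(c.store, evicted)
    end
    return value
end
```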

GitHub Repository Link

Usage Notes

Some MIDI files downloaded from the internet behave unusually when imported directly into Julia: the tempo may be missing, or all tracks may be merged into one. I found that loading the MIDI file into MuseScore and re-exporting it as MIDI resolves most of these issues. When testing the code, make sure trackname(midi.tracks[1]) works, then map instruments accordingly.

The files for this project are synthesis_v2.jl, instruments_v2.jl, main_v2.jl, and parameters.json.

Notes:

- I prompted LLMs to generate most of the parameters.

- Early in this project, I built a Julia HTTP socket server and a JavaScript frontend so I could adjust parameters on the fly. This was helpful for selecting parameters.

Output Examples

Every Breath You Take

Vordhosbn

Lloraras

Roygbiv

Further Improvements

Performance improvements

- Current processing is slow (2 to 30 seconds per MIDI file)

- Potential approaches include:

  - Parallelization

  - Batching note processing

  - Multi-threaded track processing (with caution, since tracks can vary widely in note count)
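A sketch of the multi-threaded track idea, using Threads.@spawn (Julia 1.3 and later) to render each track as its own task and mixing the results on the calling thread. render_track is a hypothetical stand-in for the real per-track renderer. Because tracks can differ a lot in note count, one long track may still dominate the total time.

```julia
# Hypothetical per-track renderer: returns a mono buffer for one track.
render_track(track_id::Int, n::Int) = fill(0.1 * track_id, n)

function render_tracks_parallel(track_ids::Vector{Int}, n::Int)
    # One task per track; the scheduler spreads tasks across available threads
    tasks = map(id -> Threads.@spawn(render_track(id, n)), track_ids)
    buffers = fetch.(tasks)
    mix = zeros(n)
    for b in buffers          # sum on the calling thread to avoid data races
        mix .+= b
    end
    return mix
end
```

Julia must be started with more than one thread (for example, `julia --threads=4`) to see a speedup. Julia's Dict is not thread-safe, so a shared note cache would need a lock (such as a ReentrantLock) or per-task caches before this could be combined with caching.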

Sound Quality

- Drum mixing and loudness equalization

- Implement more audio generation techniques:

  - FM synthesis

  - Pitch envelopes

  - Sampling or wavetables for improved performance

Architecture

- Consider a serverless or containerized backend (AWS Lambda or ECS) with a frontend

- Explore sampling for practical implementation